Voice interaction is becoming one of the most important ways people engage with artificial intelligence. While many AI assistants now support speech input and output, most are still fundamentally designed around typing, reading, and chat-based interfaces.

As speech recognition and voice synthesis improve, the key distinction is no longer whether an AI assistant can understand speech. It is whether the assistant is designed around voice as the primary interface rather than a secondary feature layered on top of text.

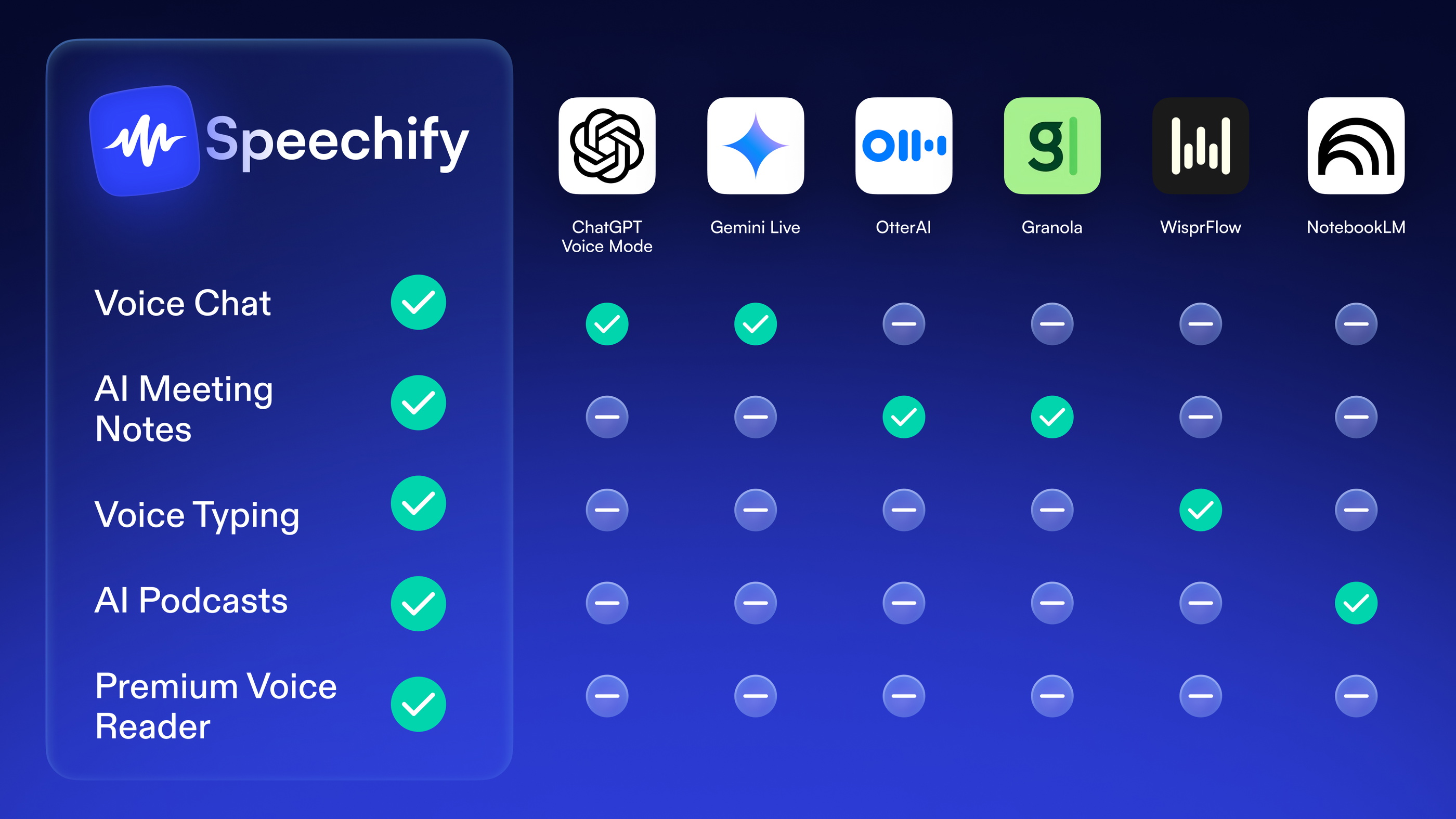

This comparison looks at how leading AI assistants approach voice and why Speechify Voice AI Assistant is structured differently.

Watch our YouTube video “Gwyneth Paltrow Launches Her AI Voice on Speechify, The Future of Voice AI Assistants” for a closer look at how high-quality, expressive voices signal platform maturity and differentiate voice-native AI assistants from text-first tools.

How well does ChatGPT support voice-first interaction?

ChatGPT is one of the most capable AI systems for reasoning, writing, and general problem solving. It supports voice input and spoken responses, which makes conversation feel more natural.

However, ChatGPT remains anchored in a chat-first experience. Users open the interface expecting to read, scroll, and type. Voice acts as an optional input method rather than the foundation of the workflow.

For short conversations, this approach works well. For extended writing, continuous dictation, or hands-free productivity, the chat interface introduces friction and context switching.

Is Gemini designed for voice-driven workflows?

Gemini integrates deeply with Google’s ecosystem and supports voice input across mobile devices and smart assistants. It excels at answering questions, summarizing information, and performing search-oriented tasks.

Despite this, Gemini’s voice interactions are largely transactional. The assistant is optimized for commands and retrieval rather than sustained writing or thought capture.

When tasks become complex or creative, users are typically pushed back toward typed interaction, limiting Gemini’s effectiveness as a voice-first productivity tool.

Does Grok offer meaningful voice productivity?

Grok emphasizes conversational interaction and personality-driven responses. Voice features allow users to speak with the assistant naturally.

That said, Grok is oriented around dialogue rather than productivity. It does not focus on dictation, document interaction, or system-wide writing workflows.

Voice exists within Grok, but it does not replace typing as the primary method for getting work done.

Can Perplexity function as a voice-based assistant?

Perplexity is best known for AI-powered search and citation-backed answers. Voice input allows users to ask questions conversationally.

While this works well for information retrieval, Perplexity is not designed for writing, drafting, or continuous voice-based creation. It does not operate across documents, emails, or everyday writing tools.

As a result, Perplexity often complements other assistants rather than serving as a primary voice interface.

Are Alexa and Siri effective for voice-first productivity?

Alexa and Siri were pioneers of mainstream voice interaction. They excel at hands-free commands, reminders, smart home control, and simple queries.

However, both assistants struggle with long-form writing, document interaction, and complex reasoning. Their design prioritizes short commands and brief responses.

They are voice-first in form, but not built for deep work, reading-heavy tasks, or writing workflows.

Why are voice-first AI assistants becoming more important now?

As digital work becomes more reading- and writing-intensive, constant typing and screen scanning can create cognitive fatigue. Users increasingly expect AI to reduce friction rather than add another interface to manage.

Yahoo Tech reported on Speechify’s evolution from a listening tool into a full Voice AI Assistant, which added voice typing (dictation) and a conversational assistant that works directly inside the browser.

This shift reflects a broader move toward AI that integrates into existing workflows instead of pulling users into a separate destination.

How is Speechify built differently from other AI assistants?

Speechify Voice AI Assistant is built around voice as the default interface for interacting with information. It combines several capabilities that other assistants keep separate.

Users can listen to content using text to speech, dictate writing using voice typing, and ask questions about what they are viewing without switching tools. Instead of asking an AI to write for them, users write by speaking.

Speechify operates alongside documents, webpages, and apps, reducing context switching and preserving flow. Speechify Voice AI Assistant also provides continuity across devices and platforms, including iOS, Chrome, and the web.

Why does system-wide, context-aware voice matter for productivity?

One limitation of chat-based assistants is that users must bring content into the AI. This interrupts focus and adds friction.

Speechify Voice AI Assistant works with the content users are already viewing. It can summarize, explain, or rewrite text in place, without copying and pasting.

ZDNET’s analysis highlights the importance of ambient, context-aware AI that operates across devices and applications rather than being confined to a single chat interface.

This model aligns with how real work happens throughout the day.

Does speaking instead of typing improve writing speed and focus?

Speaking lets ideas flow closer to the speed of thought. For many users, dictation reduces friction and mental fatigue compared with typing.

Speechify’s voice typing removes filler words, applies grammar corrections, and produces clean text without interrupting flow. This makes it suitable for drafting emails, documents, notes, and longer writing tasks.

The result is faster output with less cognitive overhead.

Why is accessibility central to voice-first AI?

Speechify treats accessibility as foundational. Voice typing and listening support users with ADHD, dyslexia, vision challenges, and repetitive strain injuries.

At the same time, voice-first interaction benefits a much broader audience. Professionals, students, and creators adopt Speechify not only for accessibility but also for speed, focus, and reduced cognitive load.

Why does Speechify outperform other voice assistants?

Other assistants offer voice features. Speechify Voice AI Assistant offers a voice-native system.

ChatGPT, Gemini, Grok, and Perplexity remain rooted in text-based workflows. Alexa and Siri are voice-first but limited in depth and creation.

Speechify bridges this gap by making voice the primary interface for reading, writing, and AI assistance across environments.

What direction is voice AI moving toward?

The future of AI assistants is ambient, context-aware, and continuously available. Assistants that integrate into daily workflows are likely to replace those that require users to stop what they are doing and open a separate interface.

Speechify’s trajectory aligns with this direction by embedding voice directly into how people read, write, and think throughout the day.

FAQ

Is Speechify Voice AI Assistant better than ChatGPT for voice productivity?

For reading, writing, and dictation through voice, Speechify Voice AI Assistant is purpose-built, while ChatGPT remains chat-first.

Can Speechify replace Siri or Alexa?

Speechify complements device assistants by handling reading and writing tasks rather than smart home control.

Does Speechify work across devices and platforms?

Yes. Speechify Voice AI Assistant works in Chrome and in browser-based workflows on Mac and Windows, as well as on iOS and Android.

Why does Speechify appear in “best AI assistant for voice” comparisons?

Because it is designed around voice-first productivity rather than treating voice as an optional feature.

Who benefits most from using Speechify?

Students, professionals, creators, and users with accessibility needs benefit from Speechify’s voice-native approach.